Introduction

GPT-4o (Omni) is a big step forward in human-computer interaction, combining multiple features into one model.

GPT-4o, where the “o” stands for “omni,” combines voice, text, and vision into one model, which makes it faster than the previous version. According to OpenAI, the new model is twice as fast and much more efficient.

Previous Voice Mode Challenges

Before GPT-4o, Voice Mode used a three-step pipeline for conversational AI:

- Audio to Text: A simple model transcribed audio input to text.

- Text Processing: GPT-3.5 or GPT-4 processed the text to generate a response.

- Text to Audio: Another simple model converted the text response back to audio.

This method had some issues:

- High Latency: Responses took 2.8 seconds on average with GPT-3.5 and 5.4 seconds with GPT-4.

- Loss of Information: The primary model (GPT-3.5 or GPT-4) received only the transcript, so it could not directly process audio nuances such as tone, multiple speakers, or background noise.

- Limited Expressiveness: It couldn’t output laughter, singing, or express emotions, reducing the naturalness of interactions.
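The three-step pipeline and its information loss can be illustrated with a minimal Python sketch. Everything here is hypothetical: `AudioClip`, `transcribe`, `generate_reply`, and `synthesize` are stand-ins invented for illustration, not OpenAI's actual components. The point is only that tone and speaker details never reach the language model.

```python
from dataclasses import dataclass


@dataclass
class AudioClip:
    """Hypothetical stand-in for a spoken input or output."""
    words: str
    tone: str           # e.g. "sarcastic"; dropped during transcription
    speaker_count: int  # also dropped during transcription


def transcribe(clip: AudioClip) -> str:
    # Step 1 (audio to text): only the words survive.
    return clip.words


def generate_reply(text: str) -> str:
    # Step 2 (text processing): the language model sees plain text only.
    return f"You said: {text}"


def synthesize(reply: str) -> AudioClip:
    # Step 3 (text to audio): the voice is always neutral in this sketch.
    return AudioClip(words=reply, tone="neutral", speaker_count=1)


def voice_mode_pipeline(clip: AudioClip) -> AudioClip:
    return synthesize(generate_reply(transcribe(clip)))


clip = AudioClip(words="Great, another meeting.", tone="sarcastic", speaker_count=1)
reply = voice_mode_pipeline(clip)
print(reply.words)  # You said: Great, another meeting.
print(reply.tone)   # neutral
```

Because `generate_reply` only ever receives a string, no change to the middle step could recover the sarcasm in the input. That is the limitation a single end-to-end model is meant to remove.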

Innovations with GPT-4o

GPT-4o (Omni) is a new version of GPT-4 that makes interacting with computers much more natural. Here’s what makes it special:

- All-in-One Processing: Unlike older versions that used separate steps, GPT-4o handles text, audio, images, and video in a single model, so it can understand and respond in a more natural and detailed manner. It is especially strong at understanding and discussing images.

For example, if you photograph a menu in a foreign language, GPT-4o can not only translate it but also suggest what to try and give historical context on the cuisine. This improved visual understanding opens up many possibilities and makes travel and research more interesting and instructive.

- Real-Time Conversations and Interactions: It can respond to spoken input almost as fast as a human, making conversations feel more real.

Soon, you’ll be able to have natural voice conversations and even show ChatGPT a live sports game to ask about the rules. This new Voice Mode is launching in alpha in the coming weeks, with early access for Plus users. It is a fascinating step towards making artificial intelligence increasingly present in everyday life.

- Cheaper and More Efficient: GPT-4o is faster and costs half as much to use, making it easier for more people and businesses to access.

- Better at Seeing and Hearing: It’s much better at understanding pictures and sounds, which is great for things like creating multimedia content, virtual reality, and advanced customer service.

Here are some GPT-4o use cases.

Customer Service: Imagine a customer service agent who handles tough issues effortlessly. GPT-4o can power such an agent.

Example: It can help troubleshoot a faulty iPhone by guiding the user through steps to reset it or diagnose the issue, providing detailed explanations and support.

Interview Preparation: Need help getting ready for an interview? ChatGPT can now analyze your appearance and suggest what to wear.

Example: If you show it your outfit, it can recommend a more professional look or suggest colours that are more suitable for a formal interview setting, offering more than just typical interview tips.

Entertainment: Looking for game night ideas? GPT-4o can recommend games for the whole family and even act as a referee.

Example: It could suggest a fun board game, explain the rules to everyone, and keep track of the score, making your social gatherings more fun.

Accessibility for People with Disabilities: In partnership with Be My Eyes, GPT-4o can assist visually impaired users.

Example: It can help someone navigate a busy street by describing their surroundings and providing directions. It can also assist in hailing a taxi by identifying nearby options and guiding the user through the process, making everyday tasks easier and more accessible.

What Will Free Users Get?

With GPT-4o, Free users will get:

- GPT-4 level intelligence: You’ll experience the same high-level intelligence as the premium models, giving you smarter and more accurate responses.

- Responses from the model and the web: Get answers from GPT-4o’s knowledge and real-time information available on the web.

- Data analysis and chart creation: Easily analyze your data and generate charts, making it simpler to visualize and understand complex information.

- Chat about your photos: Upload photos and chat about them. GPT-4o can help you understand, describe, or get information about what’s in your pictures.

- File uploads for help with summaries, writing, or analysis: Upload documents and GPT-4o will assist you by summarizing content, helping with writing, or analyzing the data within.

- Access to GPTs and the GPT Store: Discover and use various specialized GPTs for different tasks and access the GPT Store for more tools and enhancements.

- A better experience with Memory: GPT-4o can remember previous interactions to provide more personalized and context-aware responses, making your experience smoother and more tailored to your needs.

Are there any picture limits on ChatGPT-4?

Yes, ChatGPT-4 has some limitations when generating images:

- One Image at a Time – You can request only one image per prompt.

- Resolution Limits – The available sizes are 1024×1024 (square), 1792×1024 (wide), and 1024×1792 (tall).

- No Real People or Copyrighted Characters – It cannot create images of specific individuals or copyrighted content.

- Style Restrictions – It avoids mimicking modern artists’ styles.

- Text in Images is Limited – Text inside images may not always be clear or accurate.
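Since only three sizes are available, it can help to decide in advance which one best fits the shape you have in mind. The sketch below is a small illustrative helper, not part of any OpenAI tool: the `SIZES` table is copied from the list above, and the `closest_size` function name is an assumption made for this example.

```python
# Sizes copied from the list above; the labels are the article's.
SIZES = {
    "square": (1024, 1024),
    "wide": (1792, 1024),
    "tall": (1024, 1792),
}


def closest_size(width: int, height: int) -> str:
    """Return the label of the listed size whose aspect ratio is nearest."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    target = width / height
    return min(
        SIZES,
        key=lambda name: abs(SIZES[name][0] / SIZES[name][1] - target),
    )


print(closest_size(1920, 1080))  # wide
print(closest_size(1080, 1920))  # tall
print(closest_size(800, 800))    # square
```

A 16:9 banner maps to the wide option and a phone wallpaper to the tall one, so the result may still need cropping to reach the exact target ratio.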

ChatGPT 4.5 (Orion): Just an Upgrade?

OpenAI introduced ChatGPT 4.5, code-named Orion and its most advanced AI model yet, on February 27, 2025. Unlike past revisions, this isn’t a small change. Improved reasoning, a better awareness of human emotions, and support for images and files define ChatGPT 4.5.

But what does this mean for you? Is it worth the hype, and how does it compare to Chat GPT 4? Let’s break it down in simple terms.

What is ChatGPT 4.5 (Orion)?

ChatGPT 4.5, or Orion, is OpenAI’s newest and most powerful AI model to date. It improves upon Chat GPT 4 with:

✔ Better emotional intelligence – It understands emotions and social cues better, making conversations feel more human-like.

✔ Fewer mistakes (hallucinations) – It is less likely to generate incorrect or misleading information.

✔ Multimodal capabilities – It can process text, images, and files, making it more versatile.

✔ Improved logical reasoning – It handles complex questions, like math and science problems, more effectively.

Currently, ChatGPT 4.5 is available as a research preview for ChatGPT Pro users and select developers, with wider access expected soon.

What’s New in ChatGPT 4.5?

Compared to previous versions, Orion brings several upgrades that make AI conversations smoother and more reliable. Here’s what’s different:

1. Advanced Reasoning

ChatGPT 4.5 handles complex STEM and logic questions better than GPT-4o, although dedicated reasoning models such as o3-mini still lead on math and science benchmarks.

2. Better Emotional Awareness

This model is designed to recognize and respond to social cues, making conversations feel more natural and engaging.

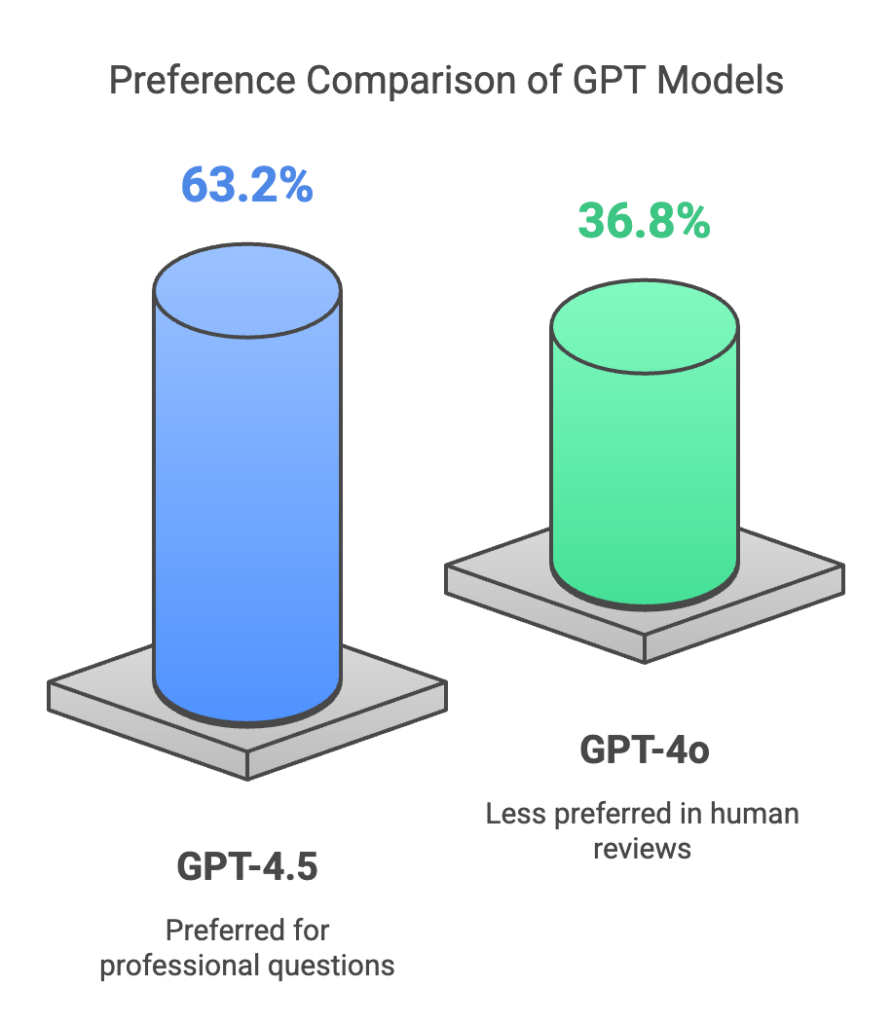

3. Lower Hallucination Rate

A major issue with AI is that it sometimes makes up facts (hallucinations). ChatGPT 4.5 significantly reduces this problem:

- ChatGPT 4.5 hallucination rate: 37.1%

- Chat GPT 4o hallucination rate: 61.8%

This means ChatGPT 4.5 is less likely to generate misleading information compared to previous models.

4. Multimodal Capabilities

You can now upload and interact with images and files, making AI more useful for tasks beyond just text.

5. Improved Context Understanding

It has been trained on data from smaller models, which improves its ability to follow conversations and understand context.

How Does Chat GPT 4.5 Perform?

Compared to its predecessors, ChatGPT 4.5 performs better in general knowledge and conversation flow but still struggles in some logic-heavy tasks like advanced math and programming.

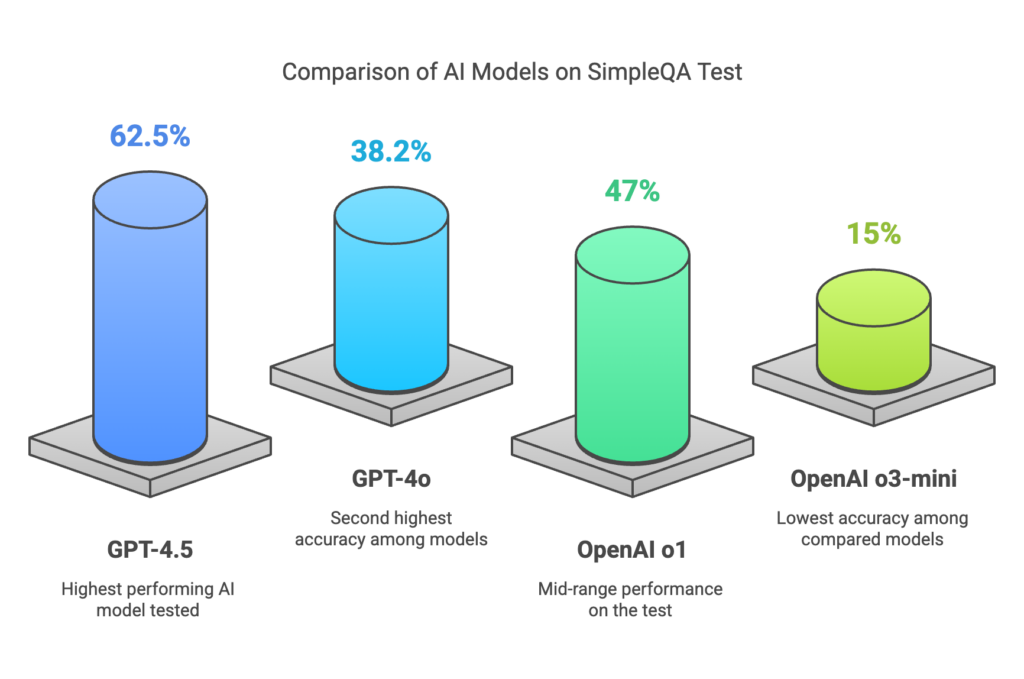

Accuracy & Hallucinations Comparison

| AI Model | Accuracy (SimpleQA Test) | Hallucination Rate |
|---|---|---|
| ChatGPT 4.5 | 62.5% | 37.1% |
| ChatGPT-4o | 38.2% | 61.8% |
| OpenAI o1 | 47% | N/A |
| OpenAI o3-mini | 15% | N/A |

Although ChatGPT 4.5 is much more accurate, 37.1% of its responses may still be incorrect, so fact-checking is still necessary.
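To put the two hallucination rates in perspective, the gap can be expressed in both absolute and relative terms. The short Python sketch below uses only the figures from the table above; the variable names are illustrative.

```python
# Hallucination rates (percent) taken from the table above.
rates = {"ChatGPT 4.5": 37.1, "ChatGPT-4o": 61.8}

# Absolute drop in percentage points.
absolute_drop = rates["ChatGPT-4o"] - rates["ChatGPT 4.5"]

# Relative drop: the share of GPT-4o's hallucinations that disappear.
relative_drop = absolute_drop / rates["ChatGPT-4o"] * 100

print(f"Absolute drop: {absolute_drop:.1f} percentage points")  # 24.7
print(f"Relative drop: {relative_drop:.1f}%")                   # 40.0%
```

In other words, ChatGPT 4.5 hallucinates about 40% less often than GPT-4o on this test, yet more than one in three answers can still be wrong.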

Reasoning & Logic Performance

| Task | GPT-4.5 | GPT-4o | OpenAI o3-mini |
|---|---|---|---|
| Science (GPQA) | 71.4% | 53.6% | 79.7% |
| Math (AIME '24) | 36.7% | 9.3% | 87.3% |
| Coding (SWE-Lancer Diamond) | 32.6% | 23.3% | 10.8% |

ChatGPT 4.5 outperforms GPT-4o on all three tasks and leads on coding, but it still trails o3-mini on science and especially on math, where complex reasoning matters most.
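A quick way to read the table is to pick the top scorer for each task. The Python sketch below does this with the figures from the table above; the `scores` and `leaders` names are illustrative only.

```python
# Benchmark scores (percent) copied from the table above.
scores = {
    "Science (GPQA)": {"GPT-4.5": 71.4, "GPT-4o": 53.6, "OpenAI o3-mini": 79.7},
    "Math (AIME '24)": {"GPT-4.5": 36.7, "GPT-4o": 9.3, "OpenAI o3-mini": 87.3},
    "Coding (SWE-Lancer Diamond)": {"GPT-4.5": 32.6, "GPT-4o": 23.3, "OpenAI o3-mini": 10.8},
}

# For each task, find the model with the highest score.
leaders = {task: max(row, key=row.get) for task, row in scores.items()}

for task, model in leaders.items():
    print(f"{task}: {model} ({scores[task][model]}%)")
```

This shows o3-mini ahead on science and math and GPT-4.5 ahead on coding, while GPT-4o does not lead on any of the three tasks.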

Who should use GPT-4.5?

✔ If you want a more human-like conversation experience

✔ If you work with multiple input types (text, images, files)

✔ If you need better accuracy and fewer hallucinations

Who might stick with GPT-4?

✔ If you need strong problem-solving in math, science, and coding

How to Use ChatGPT 4.5

As of now, ChatGPT 4.5 is gradually rolling out. Here’s how you can access it:

- Pro Users: Already have access to ChatGPT 4.5.

- Plus Users: Expected to get access soon.

- Enterprise & Education Users: Will receive access in the coming weeks.

You can select GPT-4.5 from the model picker and start using it with all of ChatGPT’s latest features.

Will There Be a ChatGPT-5?

With every new version, people ask: What’s next?

Although OpenAI hasn’t officially announced ChatGPT-5, rumors suggest that it could bring:

🧠 True multimodal capabilities (text, image, video, and audio processing)

⚡ Faster processing with even lower hallucination rates

🤖 Better reasoning and logic for math, science, and programming

But for now, ChatGPT 4.5 (Orion) is the best OpenAI model available—though it’s still far from perfect.

Should You Upgrade?

- If you want better accuracy, fewer errors, and more natural conversations, ChatGPT 4.5 is worth trying.

- If you need heavy logic, programming, or math reasoning, other models may be better.

At the end of the day, ChatGPT 4.5 is an impressive step forward, but it’s not the AI revolution just yet. Let’s see what ChatGPT-5 has in store for the future!

Potential and Future Exploration

AI has been developing faster than projected. 2024 saw big advancements, from Devin AI to the advanced capabilities of GPT-4o, and the progress is both remarkable and transformative.

GPT-4o is OpenAI’s first model to combine all these modalities, so its full potential and limitations are still being explored. This integrated approach should unlock more natural and expressive AI interactions, enabling deeper engagement and richer user experiences.